AI chatbots have become integral to modern business operations, offering personalized customer support, handling queries, and automating routine tasks. However, with the increasing reliance on AI chatbots, concerns around privacy and security are growing. Users frequently share sensitive information with chatbots, and ensuring the protection of this data is crucial for maintaining trust. This blog explores the privacy and security challenges in AI chatbots, how these challenges can be addressed, and the future of secure chatbot interactions.

Table Of Contents

- The Rise of AI Chatbots in Business Operations

- Privacy Challenges in AI Chatbots

- Security Risks Associated with AI Chatbots

- Addressing Privacy and Security Challenges in AI Chatbots

- Regulatory and Ethical Considerations in Chatbot Privacy

- Future Trends in AI Chatbot Security

- Conclusion

- References

The Rise of AI Chatbots in Business Operations

AI-powered chatbots are now widely deployed across various industries, including e-commerce, banking, healthcare, and customer service. These chatbots use machine learning (ML) algorithms and natural language processing (NLP) to understand and respond to customer inquiries efficiently, providing 24/7 support.

- Statista predicts that the global chatbot market will reach $1.25 billion by 2025, reflecting the growing dependence on AI-driven technologies.

- However, as chatbot usage increases, so do concerns around data privacy and security, especially given that chatbots handle sensitive user information.

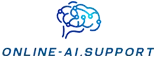

Privacy Challenges in AI Chatbots

While chatbots streamline operations, they also introduce several privacy concerns:

Data Collection and Retention

Chatbots collect large amounts of data, including names, contact information, payment details, and even sensitive personal data like medical or financial information. The risk lies in how this data is stored, shared, and used.

- Many chatbots retain user conversations for training purposes, but improper handling or insufficient safeguards can expose this data to unauthorized access or breaches.
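One way to lower the risk of retained conversations is to redact obvious identifiers before a transcript is stored. The snippet below is a minimal sketch of that idea; the two patterns and the `redact` helper are hypothetical and chosen for illustration, and a real deployment would need far broader coverage (names, addresses, health terms, and so on).

```python
import re

# Hypothetical patterns for illustration only; they are not exhaustive.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 16 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def redact(message: str) -> str:
    """Replace email addresses and card-like digit runs with placeholders."""
    message = EMAIL_RE.sub("[EMAIL]", message)
    message = CARD_RE.sub("[CARD]", message)
    return message


print(redact("Mail me at jane@example.com, card 4111 1111 1111 1111."))
```

Redacting before storage means a later breach of the transcript store exposes placeholders rather than the original values.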

Lack of Transparency

Users often aren’t fully aware of how much data chatbots are collecting or where it is being stored. A lack of clear communication about privacy policies can damage user trust, especially if data is misused or stored indefinitely without consent.

Cross-Platform Vulnerabilities

AI chatbots frequently integrate with third-party platforms like CRM systems, databases, and payment gateways. Each integration point increases the risk of data breaches, as attackers can exploit vulnerabilities in one system to gain access to another.

Security Risks Associated with AI Chatbots

In addition to privacy risks, AI chatbots face several security challenges, particularly as they become targets for cyberattacks.

Injection Attacks

Attackers can embed malicious input, such as crafted code or database commands, in chatbot messages to manipulate the systems behind the chatbot. If that input is passed on unchecked, an injection attack could grant unauthorized access, expose sensitive data, or even spread malware through the chatbot interface.
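SQL injection is one common form of this attack when chatbot input ends up in a database query. The sketch below assumes a hypothetical `orders` table and `find_order` helper, and uses Python's built-in `sqlite3` module to show how a parameterized query keeps user text from being interpreted as SQL.

```python
import sqlite3


def find_order(conn: sqlite3.Connection, order_id: str):
    # The ? placeholder makes the driver treat order_id strictly as data,
    # so quotes or SQL keywords in the user's message cannot alter the query.
    cur = conn.execute("SELECT id, status FROM orders WHERE id = ?", (order_id,))
    return cur.fetchall()


# Hypothetical in-memory table standing in for a real order database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id TEXT PRIMARY KEY, status TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("A1", "shipped"), ("B2", "pending")],
)

print(find_order(conn, "A1"))
print(find_order(conn, "A1' OR '1'='1"))
```

The second call carries a classic injection string, yet it matches no rows because the whole string is compared against the `id` column as a literal value.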

Identity Theft and Phishing

Cybercriminals may exploit AI chatbots by posing as legitimate users to gain sensitive information. Phishing attacks could also use chatbots to impersonate businesses, tricking users into sharing passwords, credit card details, or other personal data.

Weak Authentication Mechanisms

Many chatbots have insufficient authentication processes, making them vulnerable to unauthorized interactions. Without multi-factor authentication (MFA) or other robust security measures, attackers can exploit chatbots to infiltrate connected systems.

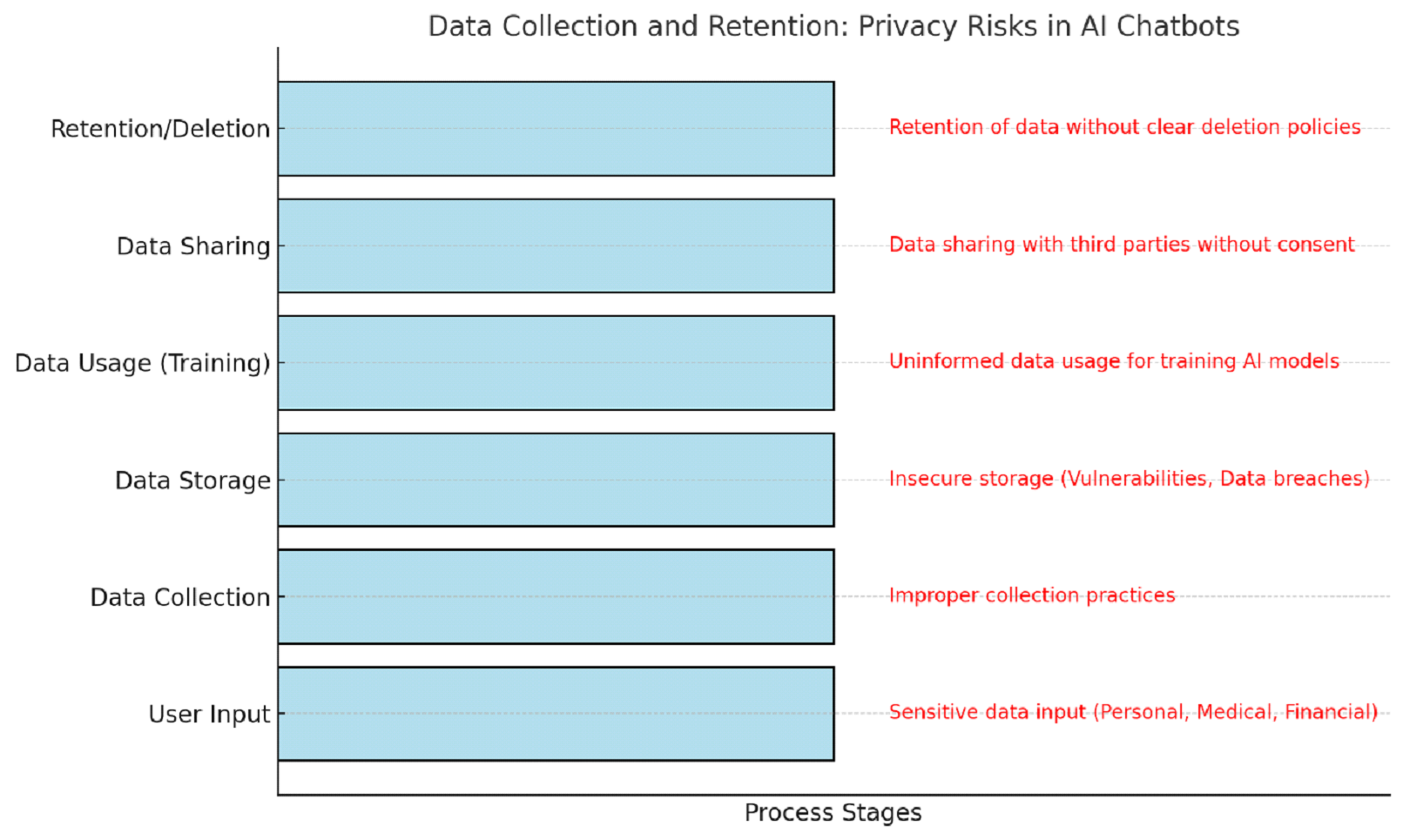

Addressing Privacy and Security Challenges in AI Chatbots

To mitigate the privacy and security risks of chatbots, businesses must prioritize securing their systems. Here are some effective strategies:

Data Encryption

Encryption is one of the most reliable methods for protecting data. Encrypting communications between the user and the chatbot, ideally end to end, reduces the risk of interception by malicious actors.

- Forbes highlights that encryption is critical for industries like finance and healthcare, which manage highly sensitive data.
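As a small illustration of encryption in transit, the sketch below builds a client-side TLS configuration with Python's standard `ssl` module. Note that this is transport-level encryption between a client and the chatbot backend rather than a full end-to-end scheme, and the `make_client_context` helper name is an assumption made for this example.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    # create_default_context() enables certificate verification and
    # hostname checking by default.
    ctx = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = make_client_context()
print(ctx.minimum_version, ctx.verify_mode, ctx.check_hostname)
```

A context like this can be passed to standard-library clients, for example through the `context` argument of `urllib.request.urlopen`.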

User Consent and Transparency

Organizations must provide transparent privacy policies that clearly explain what data is being collected, why it is collected, and how it will be used. Opt-in consent mechanisms empower users to control what data they share with the chatbot.

- The General Data Protection Regulation (GDPR) emphasizes the need for transparency and informed consent, with strict penalties for non-compliance.
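An opt-in mechanism can be as simple as checking a recorded consent before data is used for a given purpose. The following is a minimal sketch under that assumption; `ConsentRecord`, `store_transcript`, and the `"training"` purpose label are hypothetical names, not part of any specific framework.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    user_id: str
    # Purposes the user has explicitly opted into; empty by default.
    granted: set = field(default_factory=set)

    def grant(self, purpose: str) -> None:
        self.granted.add(purpose)

    def revoke(self, purpose: str) -> None:
        self.granted.discard(purpose)

    def allows(self, purpose: str) -> bool:
        return purpose in self.granted


def store_transcript(consent: ConsentRecord, transcript: str, storage: list) -> bool:
    """Keep the transcript for training only if the user opted in."""
    if not consent.allows("training"):
        return False
    storage.append(transcript)
    return True
```

Because the granted set starts empty, nothing is retained until the user actively opts in, and revoking consent stops further retention.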

Robust Authentication Methods

Incorporating strong authentication protocols, such as MFA or biometric verification, can significantly reduce the risk of unauthorized access. Regular security updates and patches should also be implemented to address known vulnerabilities.
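One widely used second factor is the time-based one-time password (TOTP) defined in RFC 6238. The sketch below implements it with the standard library only; the function names and the idea of calling it from a chatbot login flow are assumptions for illustration, and a production system would normally rely on a vetted library and also handle clock drift and rate limiting.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 TOTP code using HMAC-SHA1."""
    now = time.time() if at_time is None else at_time
    counter = int(now // step)
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation as described in RFC 4226.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_totp(secret: bytes, submitted: str, at_time: Optional[float] = None) -> bool:
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(totp(secret, at_time), submitted)


# RFC 6238 test secret; never hard-code real secrets.
print(totp(b"12345678901234567890", at_time=59, digits=8))  # 94287082
```

The printed value matches the SHA-1 test vector published in RFC 6238 for a timestamp of 59 seconds.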

Regular Audits and Penetration Testing

Routine security audits and penetration tests can help identify and fix vulnerabilities before they are exploited. Regular testing also helps chatbots remain compliant with current security standards.

Regulatory and Ethical Considerations in Chatbot Privacy

Governments and regulatory bodies are introducing measures to ensure that AI chatbots meet privacy and security standards. The GDPR in the European Union is one of the strictest data protection laws globally, setting a high benchmark for data privacy regulations.

- Under GDPR, users have the right to access, modify, and delete their data, and companies must ensure that chatbots adhere to these regulations.

- Similarly, the California Consumer Privacy Act (CCPA) gives consumers more control over their personal data, requiring businesses to disclose how they collect and use that data.
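In practice, the access and deletion rights described above mean a chatbot backend needs a way to export and erase everything it holds for a given user. Here is a minimal in-memory sketch of that idea; the `ConversationStore` class is hypothetical, and a real system would also have to cover backups, logs, and third-party integrations.

```python
class ConversationStore:
    """Toy per-user message store supporting access and erasure requests."""

    def __init__(self) -> None:
        self._by_user = {}

    def add(self, user_id: str, message: str) -> None:
        self._by_user.setdefault(user_id, []).append(message)

    def export(self, user_id: str) -> list:
        # Access request: return a copy of everything held for this user.
        return list(self._by_user.get(user_id, []))

    def erase(self, user_id: str) -> int:
        # Deletion request: remove all messages and report how many were erased.
        return len(self._by_user.pop(user_id, []))
```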

Ensuring compliance with these regulations not only helps businesses avoid legal penalties but also fosters trust, which is critical for long-term customer loyalty.

Future Trends in AI Chatbot Security

As AI chatbots become more advanced, so too will the security threats they face. However, several emerging technologies and practices promise to strengthen chatbot security in the future.

AI-Driven Security Solutions

AI can be used to enhance security by identifying and preventing breaches in real time. AI-driven threat detection systems can monitor chatbot interactions for suspicious behavior, alerting security teams to potential threats before they escalate.
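To make the monitoring idea concrete, the sketch below flags a session whose message rate is far above its historical baseline. This is a simple statistical stand-in rather than a trained model, and the `is_suspicious` helper and its threshold of three standard deviations are assumptions chosen for illustration.

```python
from statistics import mean, pstdev


def is_suspicious(history: list, current: float, threshold: float = 3.0) -> bool:
    """Flag a messages-per-minute count that sits far above the baseline."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        return current > mu
    # z-score: how many standard deviations above the mean the value is.
    return (current - mu) / sigma > threshold


baseline = [4, 5, 6, 5, 4, 6]
print(is_suspicious(baseline, 40))
print(is_suspicious(baseline, 6))
```

A production system would typically combine several signals (content, timing, account history) and feed them to a learned model, but the alerting flow is the same: score the interaction, then notify the security team when the score crosses a threshold.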

Blockchain for Data Security

Blockchain technology could strengthen chatbot security by providing a decentralized, tamper-evident method for recording user interactions. Because each entry on the ledger is cryptographically linked to the one before it, later alterations can be detected, which makes stored conversation records resistant to manipulation.
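The core property a ledger provides here is that each record is linked to its predecessor by a hash, so changing an old record breaks the chain. The sketch below shows that mechanism with a single-party hash chain; it is not a decentralized blockchain (there is no consensus or replication), and the function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry's predecessor


def _entry_hash(prev_hash: str, payload: str) -> str:
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(log: list, payload: str) -> None:
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({
        "prev": prev_hash,
        "payload": payload,
        "hash": _entry_hash(prev_hash, payload),
    })


def verify_chain(log: list) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True
```

In a real design, the payload would more likely be a hash or reference to the conversation than the conversation itself, since data written to an append-only ledger is hard to delete later.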

Privacy-Enhancing Technologies (PETs)

Technologies like differential privacy, which adds calibrated noise to aggregate results, and homomorphic encryption, which allows computation on encrypted data, let chatbots use data without exposing sensitive information. These tools improve user privacy by enabling data processing without compromising confidentiality.
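As a small example of differential privacy, the sketch below applies the Laplace mechanism to a count, such as the number of users who mentioned a given topic. The `private_count` helper and the choice of epsilon are assumptions for illustration; real deployments also need to track the total privacy budget across queries.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two independent exponential samples with mean `scale`
    # follows a Laplace distribution centered at 0 with that scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    # A counting query changes by at most 1 when one user is added or removed
    # (sensitivity 1), so the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(0)
print(private_count(100, epsilon=0.5, rng=rng))
```

Smaller values of epsilon add more noise and give stronger privacy, at the cost of less accurate counts.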

Conclusion

As AI chatbots continue to play a vital role in business operations, addressing their privacy and security challenges is essential. Businesses must prioritize data protection, ensure transparency, and implement robust security protocols to create safe, trustworthy chatbot interactions. Emerging technologies like AI-driven security and blockchain promise to further enhance security, ensuring that AI chatbots remain a secure and valuable asset for businesses in the future.

References

- Statista. “Global Chatbot Market Size.” 2023.

- Forbes. “The Importance of Data Encryption in AI Chatbots.” 2023.

- GDPR. “Key Provisions of the General Data Protection Regulation.” 2023.

- California Consumer Privacy Act (CCPA). “Understanding the Impact of CCPA on Businesses.” 2024.

ABOUT AUTHOR

Venkateshkumar S

Full-stack Developer

“Having started his professional career at an AI startup, Venkatesh has extensive experience in Artificial Intelligence and Full Stack Development. He loves to explore the innovation ecosystem and present technological advancements in simple words to his readers. Venkatesh is based in Madurai.”